Experiment 3

Binary classification with validation set implementation.

Parameters

Basic setting parameters

clip_length: 5.0 # [sec]

Preprocessing parameters

sample_rate: 44100

hop_length: 512

n_fft: 1024

n_mels: 64
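The values above determine the size of each model input. The following is a minimal sketch of that arithmetic, assuming centered framing (the default in librosa and torchaudio; the source does not say which library or framing is used). The function name `spectrogram_shape` is hypothetical.

```python
def spectrogram_shape(clip_length, sample_rate, hop_length, n_fft, n_mels):
    """Return (n_samples, n_stft_bins, n_mels, n_frames) for one clip.

    Assumption: centered framing, where the signal is padded so that
    n_frames = 1 + n_samples // hop_length.
    """
    n_samples = int(round(clip_length * sample_rate))
    n_stft_bins = n_fft // 2 + 1            # one-sided FFT bins before the mel filterbank
    n_frames = 1 + n_samples // hop_length  # number of time frames per clip
    return n_samples, n_stft_bins, n_mels, n_frames


shape = spectrogram_shape(clip_length=5.0, sample_rate=44100,
                          hop_length=512, n_fft=1024, n_mels=64)
print(shape)  # (220500, 513, 64, 431)
```

Under these assumptions each 5-second clip becomes a 64 × 431 mel spectrogram, and the 220500 samples match the "number of audio samples" value listed under the training parameters.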

Training parameters

number of audio samples: 220500 # per clip: clip_length × sample_rate

learning rate: 0.001

batch size: 30

number of epochs: 20

number of samples: 72 # 60 train + 12 validation

balanced dataset: False

random clip cut: False

Classes

Labels

grime

jazz

Class distribution

| Dataset | Classes | Class IDs | Samples |
|---|---|---|---|
| Train | grime, jazz | [0, 1] | 60 |
| Validation | grime, jazz | [0, 1] | 12 |

Metrics

Logs

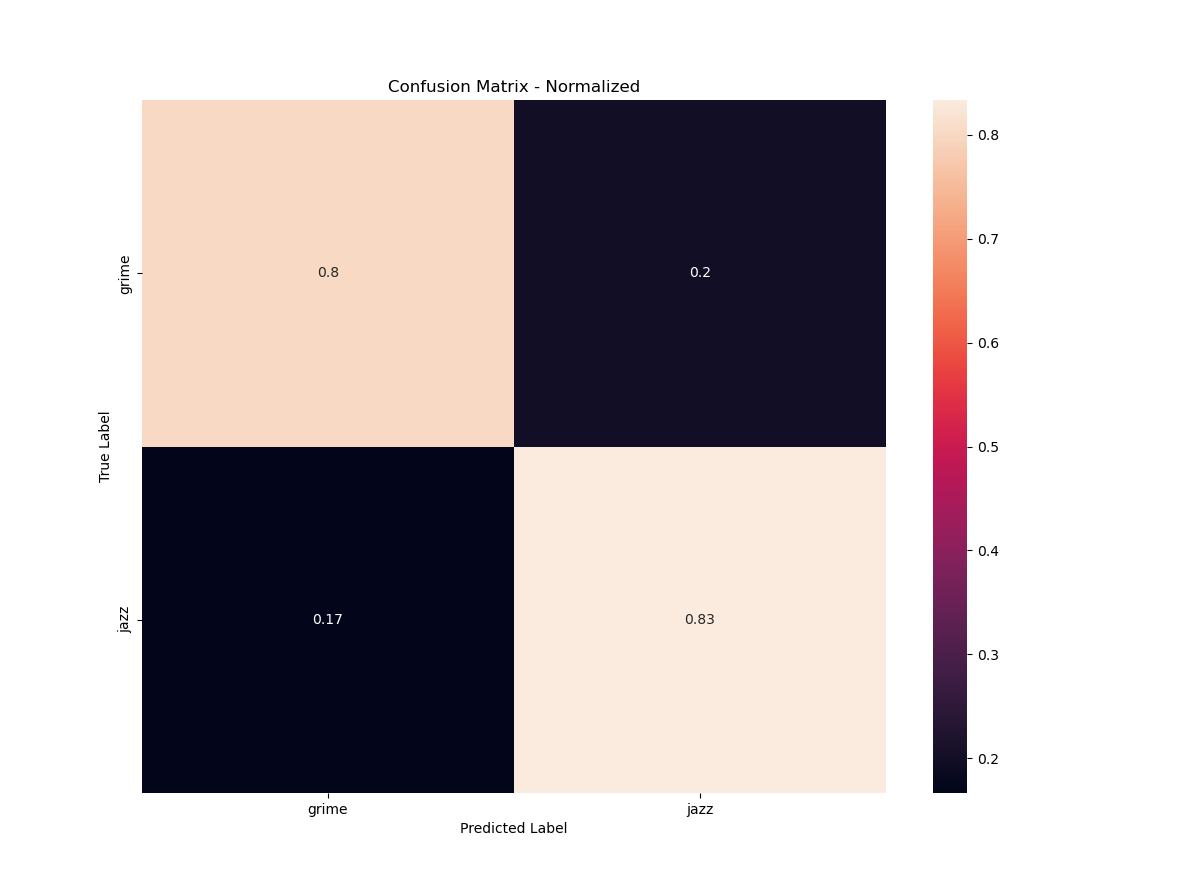

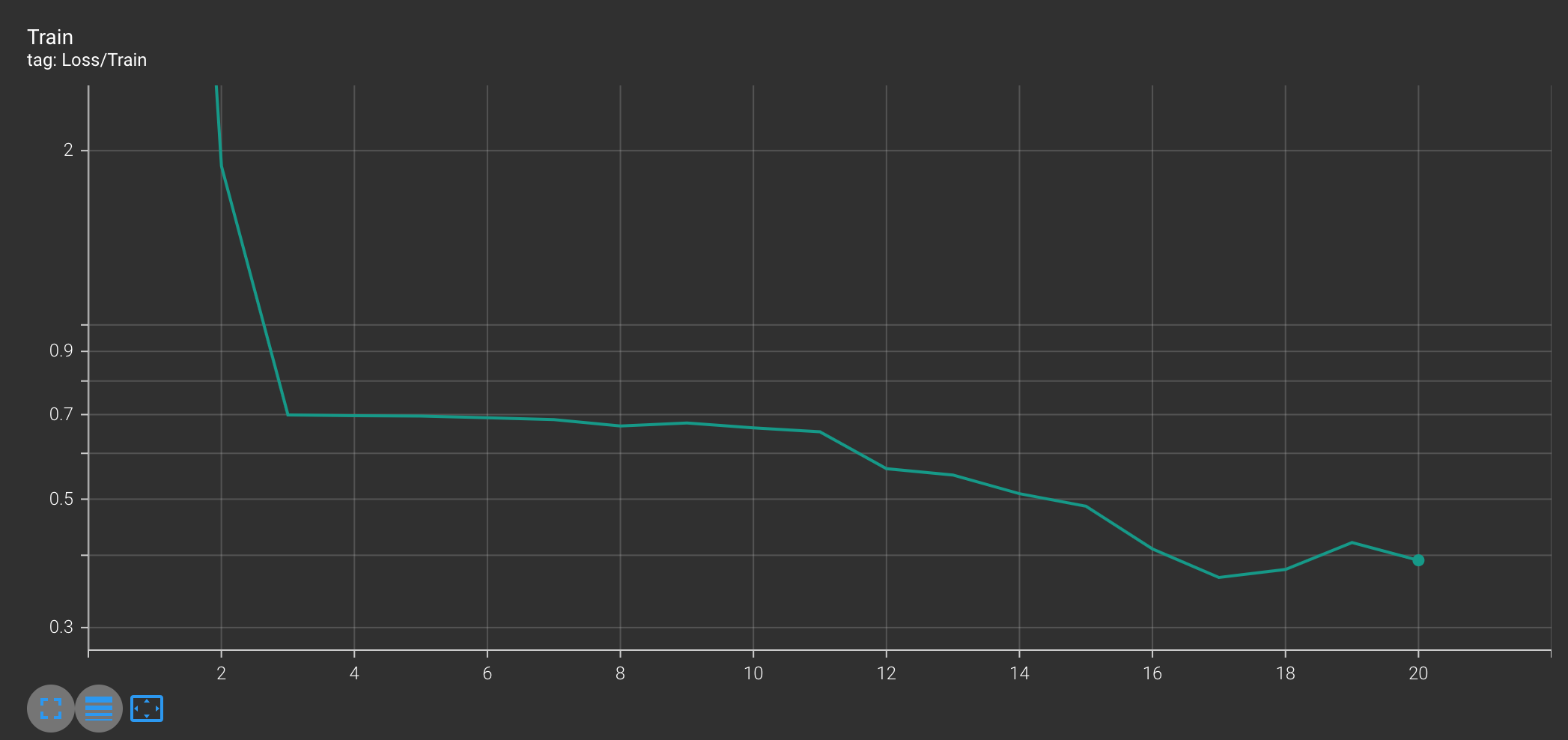

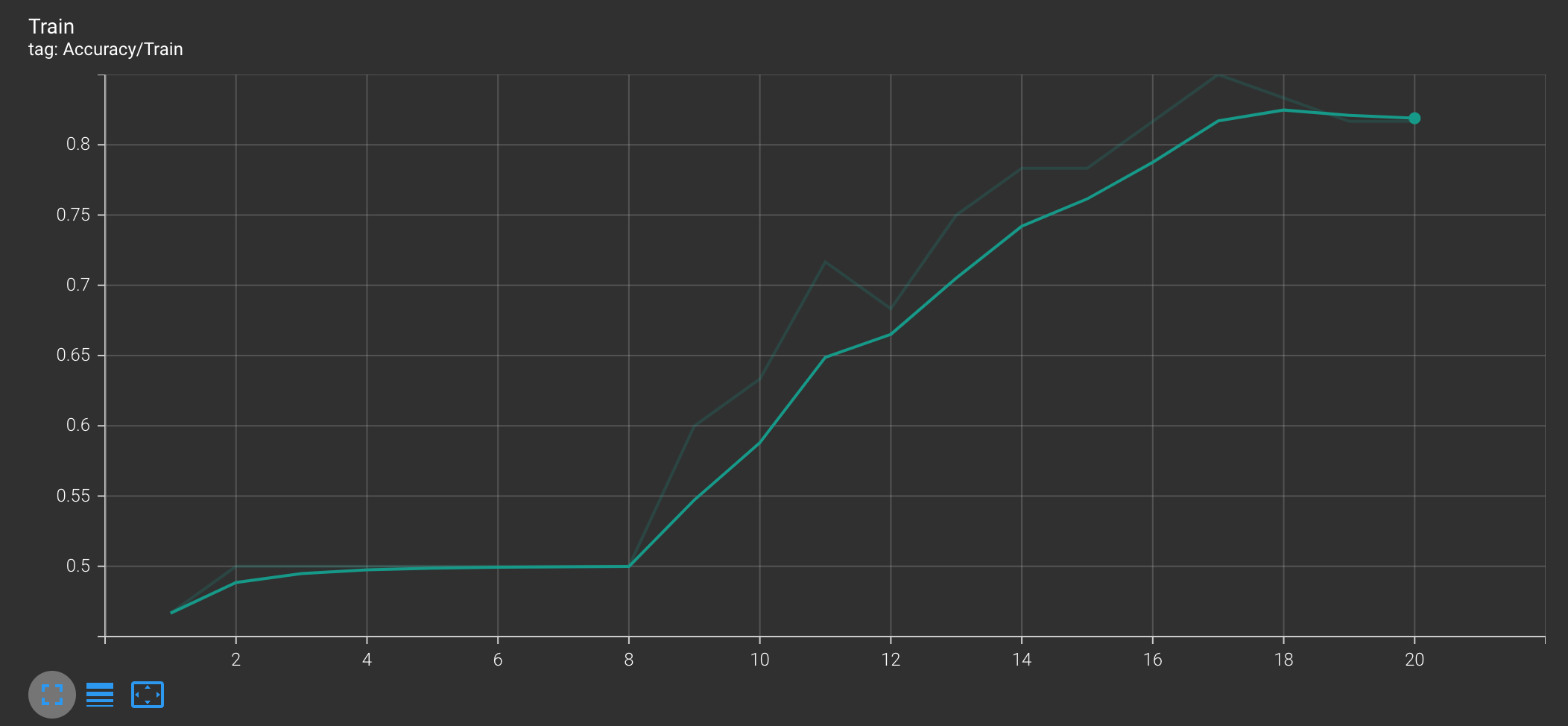

Train Epoch: 20 [60/60 (100%)] Loss: 0.353381 Accuracy: 80.0%

[Class: grime] accuracy: 80.0 %

[Class: jazz ] accuracy: 83.3 %

{'grime': 24, 'jazz': 25}

{'grime': 30, 'jazz': 30}

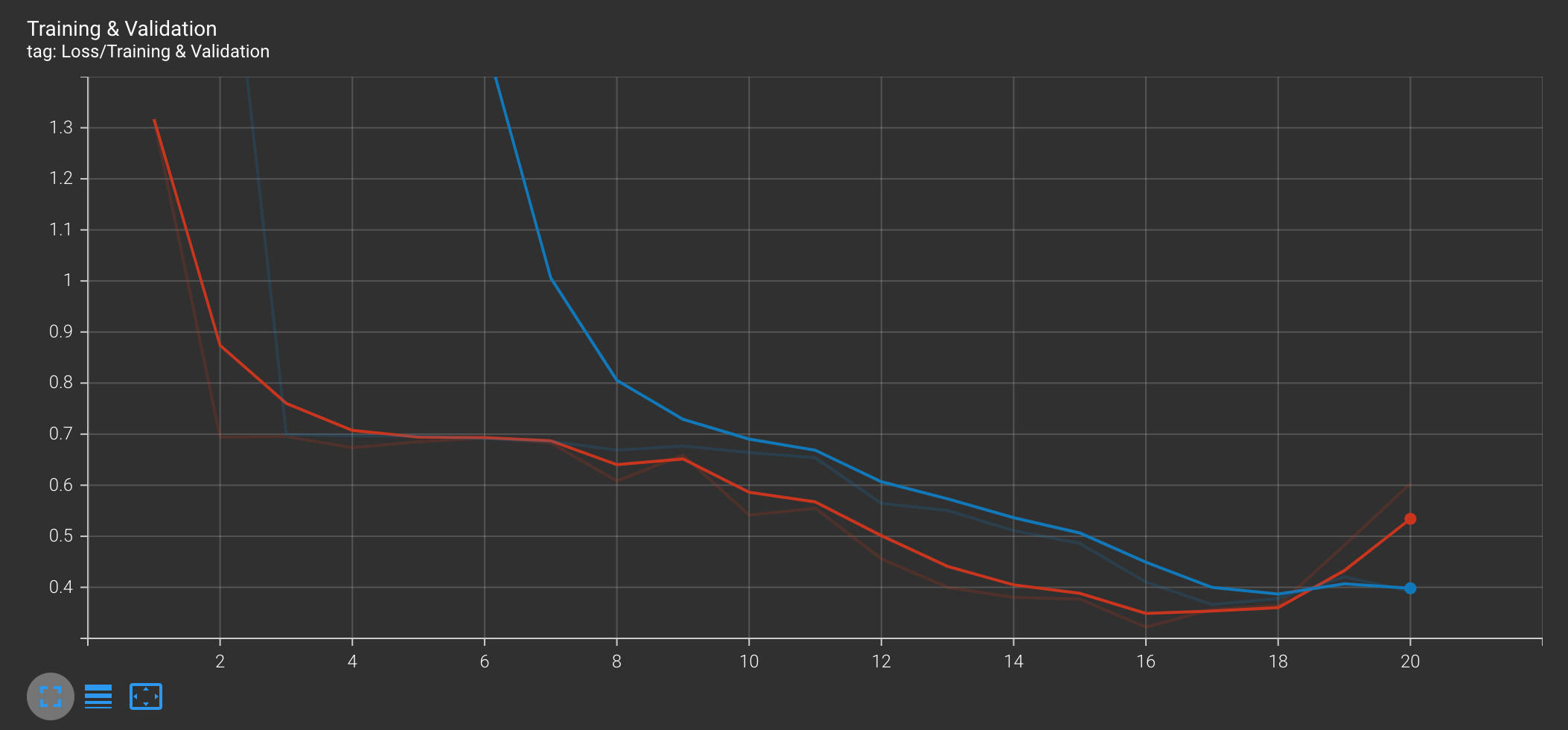

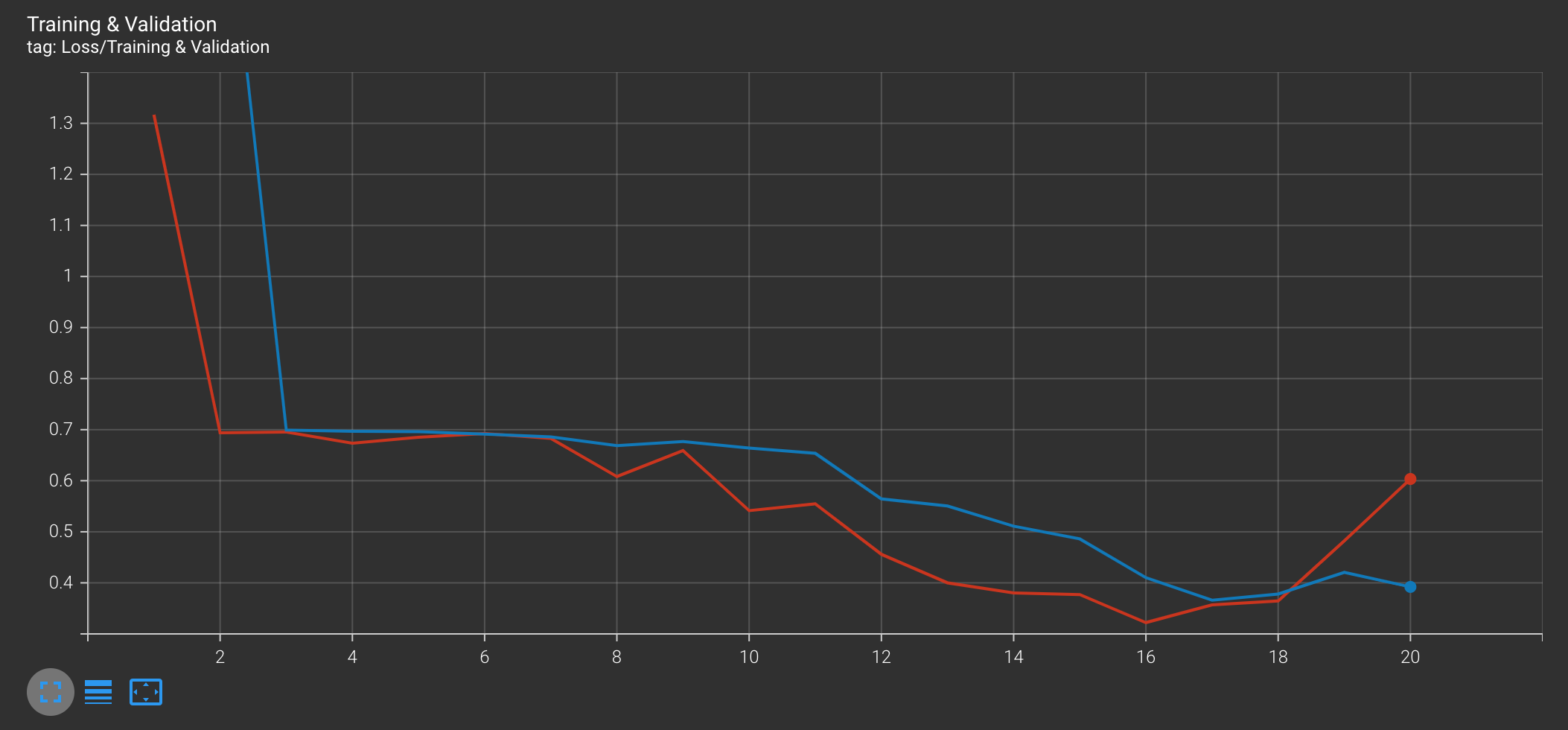

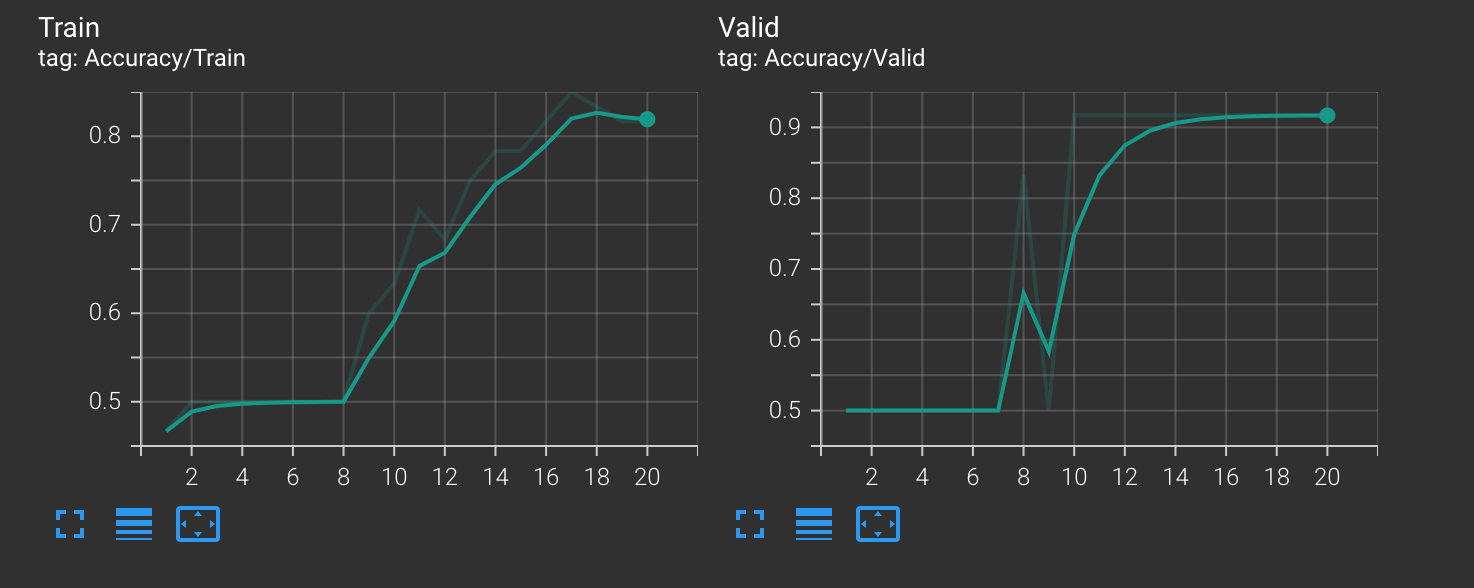

Training for Epoch: [20/20] Loss: 0.392063 Accuracy: 81.66666666666667%

[[=============================================================================================]]

Valid batch: 20 [6/12 (50%)] Loss: 1.069889 Accuracy: 83.33333333333334%

Valid batch: 20 [12/12 (100%)] Loss: 0.136948 Accuracy: 100.0%

Validation for Epoch: [20/20] Loss: 0.603418 Accuracy: 91.7%

[[=============================================================================================]]

{'grime': 5, 'jazz': 6}

{'grime': 6, 'jazz': 6}

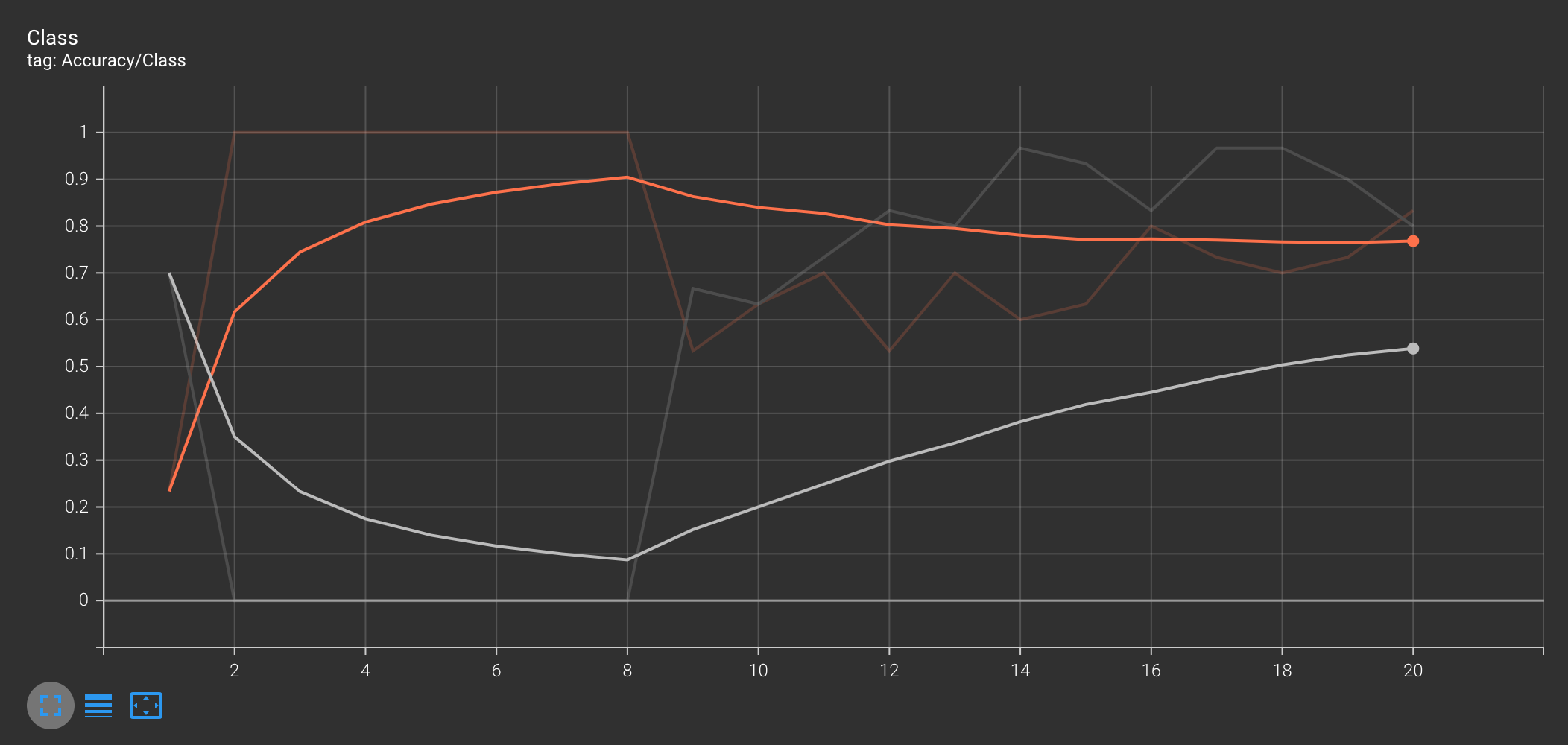

[[Validation]] Accuracy for class: grime is 83.3 %

[[Validation]] Accuracy for class: jazz is 100.0 %

LR: [0.001]

TRAINING LOSS: 0.39206255972385406

VALIDATION LOSS: 0.6034181341528893

Saving checkpoint: /Users/inhalt/Documents/Gendy/apps/MLVibeCaption/mlvcaudio/models/outputs/checkpoint-epoch20.pth ...

Training is done!

Model trained and stored!

Cross entropy
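For reference, cross-entropy for a single sample can be sketched as below. This assumes the loss is the usual softmax cross-entropy over two logits, which the source does not confirm; the logits are hypothetical values chosen for illustration, not model outputs.

```python
import math


def cross_entropy(logits, target):
    """Cross-entropy of one sample: -log(softmax(logits)[target])."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


# Hypothetical logits for the two classes (index 0 = grime, index 1 = jazz).
confident_right = cross_entropy([2.0, -1.0], target=0)
confident_wrong = cross_entropy([2.0, -1.0], target=1)
uninformed = cross_entropy([0.0, 0.0], target=0)  # equals ln 2 for two classes

print(confident_right < uninformed < confident_wrong)  # True
```

A two-class model that always outputs equal scores has a loss of ln 2 ≈ 0.693, so the logged epoch-20 losses (0.392 training, 0.603 validation) both sit below that chance level.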

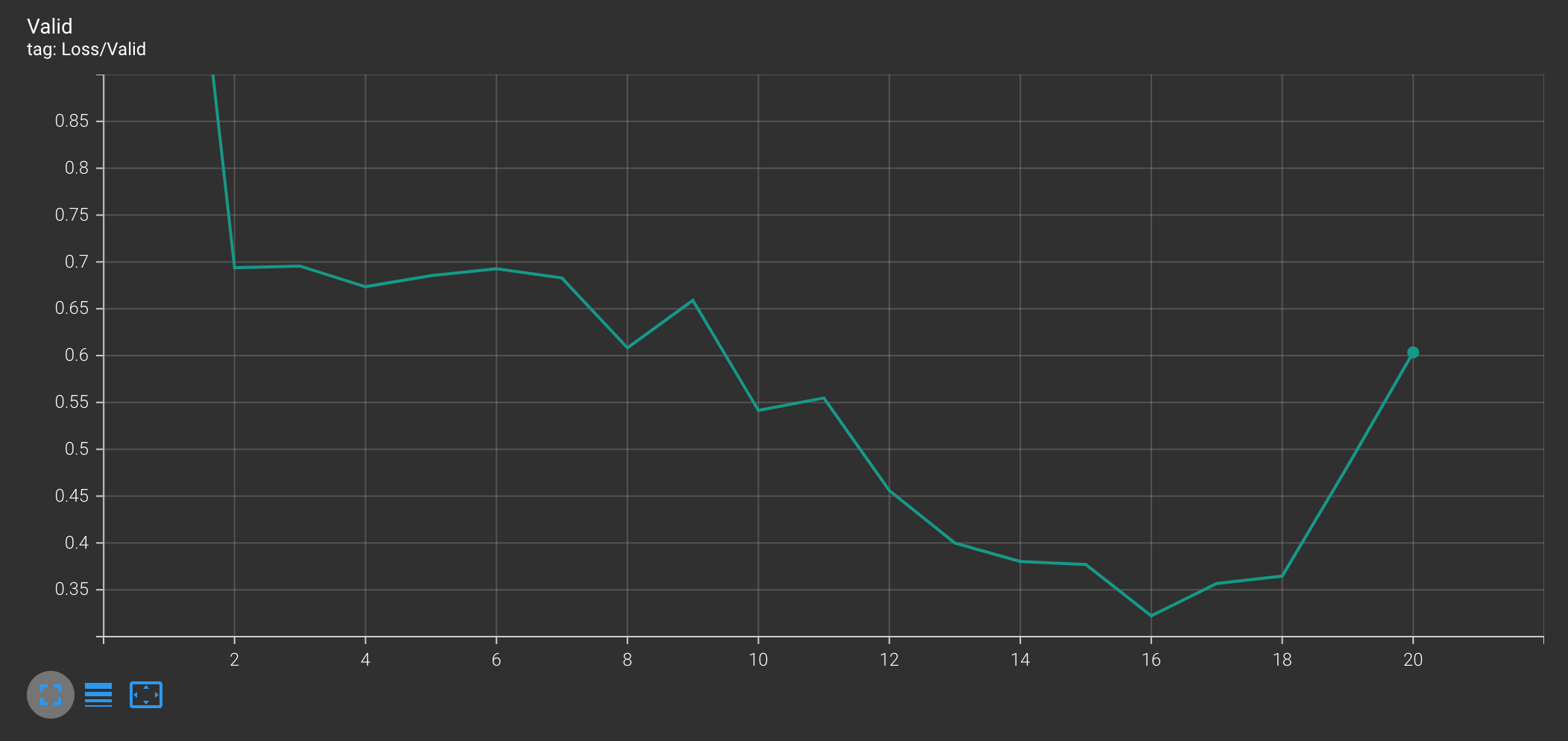

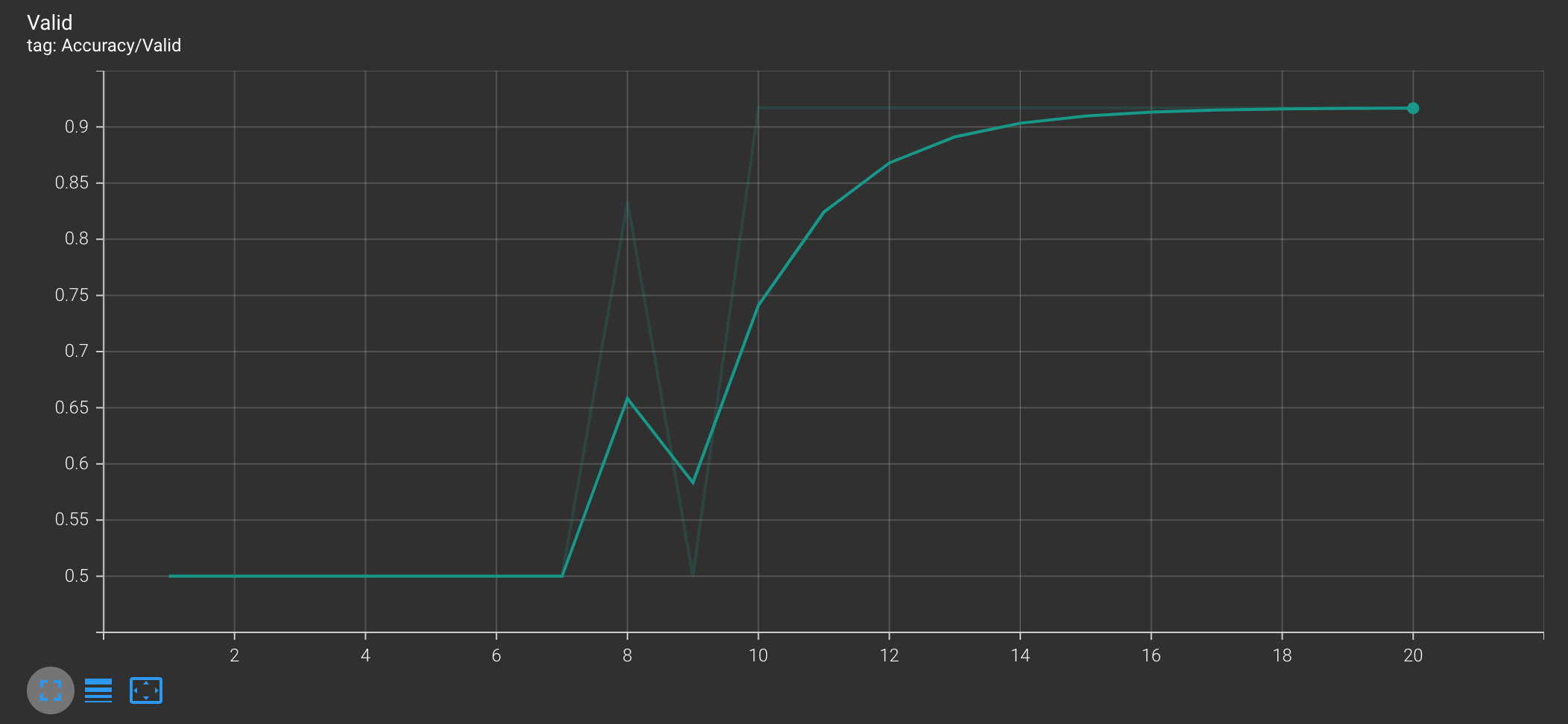

Training/Validation

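Validation loss is lower than training loss, so the train and validation sets must be redistributed. At epoch 20, however, the logs above report a validation loss of 0.603 against a training loss of 0.392, while validation accuracy (91.7%) exceeds training accuracy (81.7%).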
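One way to redistribute the sets is a seeded, stratified re-split. The sketch below is an assumption about how that could be done, not the project's actual splitting code; `stratified_split` and its parameters are hypothetical names, and the label list only mirrors the class counts from the logs (36 clips per class).

```python
import random
from collections import defaultdict


def stratified_split(labels, val_fraction, seed=0):
    """Return (train_indices, val_indices) with the same class ratio in both."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, val = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        n_val = round(len(indices) * val_fraction)
        val.extend(indices[:n_val])
        train.extend(indices[n_val:])
    return sorted(train), sorted(val)


# 72 clips, 36 per class (30 train + 6 validation each in the logs).
labels = ["grime"] * 36 + ["jazz"] * 36
train_idx, val_idx = stratified_split(labels, val_fraction=12 / 72)
print(len(train_idx), len(val_idx))  # 60 12
```

Changing `seed` gives a different assignment of clips to the two sets while keeping 30/30 and 6/6 per class, which makes it possible to check whether the gap between training and validation metrics depends on the particular split.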

Training

Validation

Accuracy

Class
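The per-class figures in the logs can be reproduced from the two count dictionaries printed next to them. This sketch assumes the first dictionary holds correctly classified samples and the second holds totals per class, which is consistent with the logged percentages; the function names are hypothetical.

```python
def per_class_accuracy(correct, total):
    """Percentage of correctly classified samples for each class."""
    return {name: 100.0 * correct[name] / total[name] for name in total}


def overall_accuracy(correct, total):
    """Percentage of correctly classified samples over all classes."""
    return 100.0 * sum(correct.values()) / sum(total.values())


# Counts copied from the epoch-20 logs.
train_correct, train_total = {"grime": 24, "jazz": 25}, {"grime": 30, "jazz": 30}
val_correct, val_total = {"grime": 5, "jazz": 6}, {"grime": 6, "jazz": 6}

train_acc = per_class_accuracy(train_correct, train_total)
val_acc = per_class_accuracy(val_correct, val_total)

for name, acc in val_acc.items():
    print(f"[Validation] {name}: {acc:.1f} %")
```

With only 6 validation clips per class, a single misclassified clip moves that class's accuracy by about 16.7 points.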

Batch
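The epoch-level validation figures in the logs are consistent with an unweighted mean over the per-batch values. The sketch below checks that with the logged numbers; that the training code aggregates this way is an inference from the logs, not something the source states, and `epoch_mean` is a hypothetical helper.

```python
def epoch_mean(batch_values):
    """Unweighted mean of per-batch metric values."""
    return sum(batch_values) / len(batch_values)


# Per-batch values copied from the epoch-20 validation logs.
val_batch_loss = [1.069889, 0.136948]
val_batch_acc = [83.33333333333334, 100.0]

epoch_loss = epoch_mean(val_batch_loss)
epoch_acc = epoch_mean(val_batch_acc)

print(f"Loss: {epoch_loss:.6f} Accuracy: {epoch_acc:.1f}%")
```

An unweighted mean equals the per-sample mean only when all batches have the same size, as the two 6-clip validation batches do here.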

Train Accuracy

Validation Accuracy

Confusion Matrix
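For two classes, the confusion matrix follows directly from the per-class counts in the logs, because every misclassified clip of one class must have been predicted as the other. A minimal sketch, assuming the logged dictionaries are correct counts and totals per class; `binary_confusion_matrix` is a hypothetical helper.

```python
def binary_confusion_matrix(correct, total, classes):
    """Rows are true classes, columns are predicted classes.

    Only valid for two classes: each misclassified sample of one class
    is necessarily a prediction of the other class.
    """
    if len(classes) != 2:
        raise ValueError("counts determine the matrix only for two classes")
    a, b = classes
    return [
        [correct[a], total[a] - correct[a]],
        [total[b] - correct[b], correct[b]],
    ]


classes = ["grime", "jazz"]
train_matrix = binary_confusion_matrix(
    {"grime": 24, "jazz": 25}, {"grime": 30, "jazz": 30}, classes)
val_matrix = binary_confusion_matrix(
    {"grime": 5, "jazz": 6}, {"grime": 6, "jazz": 6}, classes)

print(val_matrix)  # [[5, 1], [0, 6]]
```

Read this way, the only validation error at epoch 20 is one grime clip predicted as jazz.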